Project: Deep Q-Learning Agent

Overview

- Created a Gym environment for a simple third-person shooter game in Python

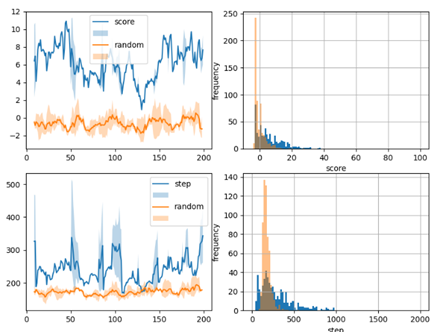

- Implemented a simple Deep Q-Network (DQN) with PyTorch to train agents to master the game (left image)

- Fine-tuned the agent's hyperparameters, achieving an average kill streak of 7 (right image, top) and a fourfold increase in survival duration (right image, bottom), significantly better than the random baseline of 0.22 kills on average.

- Explored how deep Q-learning models handle a variable number of moving objects (the bullets and enemies), and the adjustments to the reward function and the observation-space representation that this requires.
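One common way to feed a variable number of objects into a fixed-size network input is to keep only the nearest few objects within the vision range and zero-pad the empty slots. The sketch below illustrates that idea only; the function name `encode_objects`, the slot layout `(dx, dy, present)`, and the default values are assumptions for illustration, not the representation used in this project.

```python
import math

def encode_objects(player_xy, objects_xy, max_objects=3, vision_range=10.0):
    """Encode a variable number of objects as a fixed-length vector.

    Hypothetical scheme: keep the nearest `max_objects` objects within
    `vision_range`, store each as (dx, dy, present), and zero-pad the rest.
    """
    px, py = player_xy
    visible = []
    for x, y in objects_xy:
        dx, dy = x - px, y - py
        dist = math.hypot(dx, dy)
        if dist <= vision_range:  # objects outside the vision range are hidden
            visible.append((dist, dx, dy))
    visible.sort()  # nearest first

    obs = []
    for _, dx, dy in visible[:max_objects]:
        # Normalise offsets to [-1, 1]; the 1.0 flag marks a real object.
        obs.extend([dx / vision_range, dy / vision_range, 1.0])
    # Pad empty slots so the network input size never changes.
    obs.extend([0.0] * (3 * max_objects - len(obs)))
    return obs

# One zombie in range, one far away, two slots available.
print(encode_objects((0, 0), [(3, 4), (100, 100)], max_objects=2))
```

The "present" flag lets the network distinguish an empty slot from a real object sitting exactly on the player, which plain zero-padding alone cannot do.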

Game Design

- Survive a zombie apocalypse by controlling a snowball-throwing character.

- Goal: Survive as long as possible while killing zombies.

- Player has limited vision range.

- Game ends when a zombie touches the player.

- Image: Human Gameplay; Art: Myself; AI training: Myself; Observation and Reward Design: Myself & Jerry; Game Code: Jerry & Myself

Model

- Deep Q-Network (DQN) model for agent training.

- 3-layer feedforward neural network in TensorFlow.

- Uses experience replay with batch training.

- Epsilon-greedy exploration with a decaying epsilon.
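The two training mechanics listed above, experience replay and decaying exploration, can be sketched in plain Python without committing to a deep learning framework. This is a minimal illustration under assumed names and values (`ReplayBuffer`, `decayed_epsilon`, `select_action`, and the decay constants are all hypothetical), not the project's actual code.

```python
import random
from collections import deque

class ReplayBuffer:
    """Fixed-capacity store of transitions; the oldest entries are evicted first."""

    def __init__(self, capacity):
        self.buffer = deque(maxlen=capacity)

    def push(self, state, action, reward, next_state, done):
        self.buffer.append((state, action, reward, next_state, done))

    def sample(self, batch_size):
        # Uniform sampling without replacement breaks the correlation
        # between consecutive frames of the same episode.
        return random.sample(list(self.buffer), batch_size)

    def __len__(self):
        return len(self.buffer)

def decayed_epsilon(step, eps_start=1.0, eps_end=0.05, decay=0.995):
    """Exponentially decay epsilon from eps_start towards a floor of eps_end."""
    return max(eps_end, eps_start * decay ** step)

def select_action(q_values, epsilon):
    """Epsilon-greedy: random action with probability epsilon, else argmax Q."""
    if random.random() < epsilon:
        return random.randrange(len(q_values))
    return max(range(len(q_values)), key=lambda a: q_values[a])

# Usage: fill the buffer, then draw a training batch once enough data exists.
buffer = ReplayBuffer(capacity=1000)
for t in range(64):
    buffer.push(state=[t], action=0, reward=0.0, next_state=[t + 1], done=False)
batch = buffer.sample(32)
```

In a full training loop, each sampled batch would be passed to the network to compute Q-value targets, and `decayed_epsilon` would be evaluated once per step or per episode.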

Results

- Tested multiple discount factors (gamma).

- Adjusted rewards to improve the agent's learning of long-term dependencies.

- Achieved an average kill streak of 7 and quadrupled survival time, far surpassing the random baseline of 0.22 kills on average.
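To see why gamma matters for long-term dependencies, it helps to compute how much a delayed reward is worth at the moment the agent acts. The sketch below uses the standard discounted return and one-step Q-learning target; the reward values and function names are illustrative assumptions, not figures from this project.

```python
def discounted_return(rewards, gamma):
    """Sum of rewards, each discounted by gamma per step of delay."""
    g = 0.0
    for r in reversed(rewards):
        g = r + gamma * g
    return g

def td_target(reward, next_q_values, gamma, done):
    """One-step Q-learning target: r + gamma * max_a Q(s', a), or r at episode end."""
    if done:
        return reward
    return reward + gamma * max(next_q_values)

# Hypothetical episode: a kill reward of 1 arrives four steps after the shot.
rewards = [0.0, 0.0, 0.0, 0.0, 1.0]
for gamma in (0.5, 0.9, 0.99):
    print(gamma, round(discounted_return(rewards, gamma), 4))
```

With a low gamma the delayed kill reward is almost invisible at the time of the shot, while a gamma close to 1 preserves most of it, which is one reason the choice of gamma and the shape of the rewards interact.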

Notable Behaviors (1)

- AI learns to control its orientation for precise shooting.

- AI refines orientation control to improve shooting accuracy.

Notable Behaviors (2)

- AI learns to evade zombies by retreating to the map corner.

- AI retreats to a corner for better firing coverage.

Notable Behaviors (3)

- AI adopts a spinning and frequent shooting strategy.

- AI spins and shoots frequently to maximize hits.

Future Directions

- Further training is needed; it was cut short by the time constraints of this project.

- Interested in refining the rewards and exploring new game mechanics in the near future.