Project: GAN Generation


Backup of GAN Learning Project (August 2022)

[!NOTE] The project explores various GAN architectures and improvements through iterative versions.

[!IMPORTANT] This project is a personal learning exercise in understanding and implementing different GAN techniques.

This project re-implemented GAN, WGAN, and conditional GAN, and explored problems typical of GAN-based architectures, such as mode collapse and sensitivity to hyperparameters.

References

- The YouTube video series "Generative Adversarial Networks (GANs)", Aladdin Persson, at https://www.youtube.com/playlist?list=PLhhyoLH6IjfwIp8bZnzX8QR30TRcHO8Va

Setup

Environment:

- Python version: 3.x

- Framework: PyTorch

- Dataset: MNIST

- Platform: Google Colab

Experiments

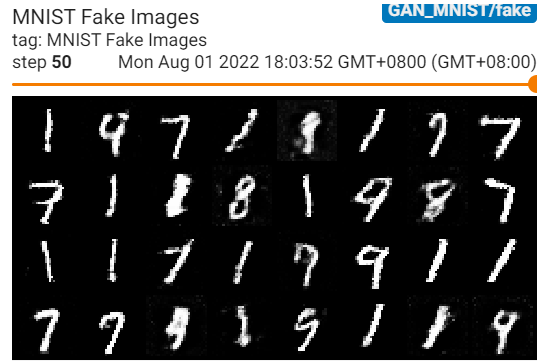

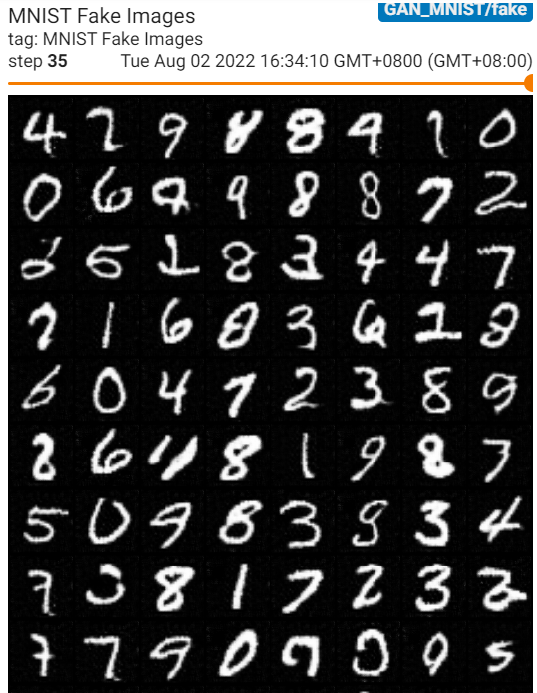

MNIST Digit Generation

Explored various GAN architectures:

- Standard GAN

- Wasserstein GAN (WGAN)

- Conditional WGAN
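The main difference between the standard GAN and WGAN lies in the loss functions and in how the discriminator (called a critic in WGAN) is constrained. Below is a minimal PyTorch sketch of one training step for each, assuming small fully connected networks on flattened 28x28 images. The network sizes, `Z_DIM`, and the helper names (`make_generator`, `gan_d_loss`, `wgan_c_loss`, `train_step`, and so on) are hypothetical and not taken from the original project. For brevity it runs one critic update per generator update. The WGAN paper instead uses several critic updates (n_critic = 5), together with RMSprop at a learning rate of 5e-5 and weight clipping at c = 0.01.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F

# Hypothetical sizes: 64-dim noise, MNIST images flattened to 784 values in [-1, 1].
Z_DIM, IMG_DIM = 64, 28 * 28

def make_generator() -> nn.Module:
    return nn.Sequential(
        nn.Linear(Z_DIM, 256), nn.LeakyReLU(0.2),
        nn.Linear(256, IMG_DIM), nn.Tanh(),  # Tanh matches images normalized to [-1, 1]
    )

def make_discriminator() -> nn.Module:
    # Outputs a raw score (no sigmoid), so the same network can serve as a WGAN critic.
    return nn.Sequential(
        nn.Linear(IMG_DIM, 256), nn.LeakyReLU(0.2),
        nn.Linear(256, 1),
    )

# Standard GAN: binary cross-entropy on logits, with the non-saturating generator loss.
def gan_d_loss(real_logits, fake_logits):
    return (F.binary_cross_entropy_with_logits(real_logits, torch.ones_like(real_logits))
            + F.binary_cross_entropy_with_logits(fake_logits, torch.zeros_like(fake_logits)))

def gan_g_loss(fake_logits):
    return F.binary_cross_entropy_with_logits(fake_logits, torch.ones_like(fake_logits))

# WGAN: the critic maximizes E[c(real)] - E[c(fake)], so we minimize its negative.
def wgan_c_loss(real_scores, fake_scores):
    return -(real_scores.mean() - fake_scores.mean())

def wgan_g_loss(fake_scores):
    return -fake_scores.mean()

def train_step(gen, disc, opt_g, opt_d, real, wasserstein=False, clip=0.01):
    d_loss_fn, g_loss_fn = (wgan_c_loss, wgan_g_loss) if wasserstein else (gan_d_loss, gan_g_loss)
    fake = gen(torch.randn(real.size(0), Z_DIM))

    # Discriminator/critic update; detach() keeps gradients out of the generator.
    d_loss = d_loss_fn(disc(real), disc(fake.detach()))
    opt_d.zero_grad()
    d_loss.backward()
    opt_d.step()
    if wasserstein:
        # Weight clipping, as in the original WGAN, keeps the critic roughly Lipschitz.
        with torch.no_grad():
            for p in disc.parameters():
                p.clamp_(-clip, clip)

    # Generator update: re-score the same fake batch with the updated discriminator.
    # (This also fills disc gradients, which opt_d.zero_grad() clears on the next step.)
    g_loss = g_loss_fn(disc(fake))
    opt_g.zero_grad()
    g_loss.backward()
    opt_g.step()
    return d_loss.item(), g_loss.item()
```

Because the last layer has no sigmoid, switching to `wasserstein=True` changes what the output means: it becomes an unbounded critic score rather than a probability logit. The learning rates passed to the optimizers are exactly the hyperparameter to which GAN training is known to be sensitive.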

Key Experiments

- Experimented with different learning rates

- Observed the phenomenon of mode collapse and the sensitivity of GAN training to the learning rate

- Studied the GAN architecture, the improvements introduced by WGAN, and the principle of conditioning a GAN on class labels
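Providing class conditions can be sketched by feeding the digit label to both networks. The sketch below assumes fully connected layers. It concatenates a learned label embedding (`nn.Embedding`) to the noise vector in the generator and to the flattened image in the critic. The class names, `EMB_DIM`, layer sizes, and `cond_wgan_critic_loss` are hypothetical. The original project may have injected the label differently, for example as an extra image channel in a convolutional critic.

```python
import torch
import torch.nn as nn

# Hypothetical sizes: 10 MNIST classes, 64-dim noise, 16-dim label embedding.
NUM_CLASSES, Z_DIM, IMG_DIM, EMB_DIM = 10, 64, 28 * 28, 16

class CondGenerator(nn.Module):
    """Generator conditioned on a digit label by concatenating a label embedding to z."""
    def __init__(self):
        super().__init__()
        self.embed = nn.Embedding(NUM_CLASSES, EMB_DIM)
        self.net = nn.Sequential(
            nn.Linear(Z_DIM + EMB_DIM, 256), nn.LeakyReLU(0.2),
            nn.Linear(256, IMG_DIM), nn.Tanh(),
        )

    def forward(self, z, labels):
        return self.net(torch.cat([z, self.embed(labels)], dim=1))

class CondCritic(nn.Module):
    """Critic that scores an (image, label) pair, so it can penalize images of the wrong class."""
    def __init__(self):
        super().__init__()
        self.embed = nn.Embedding(NUM_CLASSES, EMB_DIM)
        self.net = nn.Sequential(
            nn.Linear(IMG_DIM + EMB_DIM, 256), nn.LeakyReLU(0.2),
            nn.Linear(256, 1),
        )

    def forward(self, x, labels):
        return self.net(torch.cat([x, self.embed(labels)], dim=1))

def cond_wgan_critic_loss(critic, gen, real, labels):
    # Fakes are generated for the same labels as the real batch, so the critic
    # compares real and fake samples of matching classes.
    fake = gen(torch.randn(real.size(0), Z_DIM), labels).detach()
    return -(critic(real, labels).mean() - critic(fake, labels).mean())
```

At sampling time, fixing `labels` lets you request a specific digit, for example `gen(torch.randn(10, Z_DIM), torch.arange(10))` for one sample of each digit. This also makes mode collapse easier to spot. If samples for a given label barely change across different `z`, that can be a sign the generator is ignoring the noise.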